I'd like to think that I have a great deal of patience.

(I mean, I taught my mom how to use an iPhone, so that's got to count for something, right?)

However, when it comes to A/B testing, it seems as though my willingness to wait goes out the window.

Eager to report on my findings, I find myself refreshing the page incessantly. But truth be told, the key to effective A/B testing is to give the test the time it needs to run its course.

In other words, cutting a test short could cost you the validity of the experiment. Conversely, waiting too long to call it could skew the data just the same.

So when should you conclude an A/B test?

We've dug into our own experiences (and the advice of others) to help answer this question once and for all.

The dangers of concluding too early

Before we get into real numbers, it's important to define what's at stake.

Oftentimes, marketers will spot what they think is a trend in the data after just a couple of days and close the test.

Let's make one thing clear: "just a couple of days" is rarely enough time to draw any significant conclusions about which variation performed best.

Test results can change very drastically, very quickly.

To help paint this picture, check out this example from ConversionXL. The following details the results of an A/B test just two days after launch:

As you can tell, the control was crushing the variation. The tool reported a 0% chance that the variation would outperform the control.

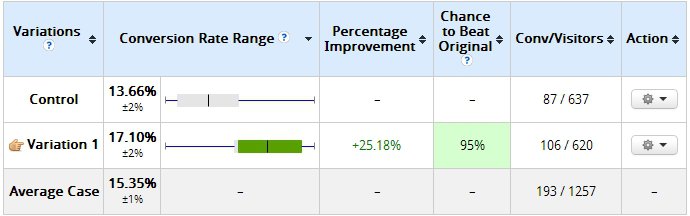

Here's a look at the results after 10 days:

Looks like that 0% chance turned into a 95% chance really fast.

Point being, had they concluded the test early on, the results would have been totally invalid.

How to make the right call

While it's tough to definitively say, "You should run your test for X days," there are a few ways to arrive at a sound ending point for your test.

In fact, The Definitive Guide to Conversion Optimization by Neil Patel and Joseph Putnam defines the following guidelines for determining when to end a test:

- You should run a test for at least 7 days.

- There should be a 95% (or higher) likelihood of finding a winning version.

- You should wait until there are at least 100 conversions.
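To make those three guidelines concrete, here's a minimal sketch of how you might check them in one place. A few assumptions on my part: the "95% likelihood" is read as the confidence from a standard two-proportion z-test, the 100-conversion minimum is applied to each variation (the guide doesn't specify), and the function names are made up for illustration.

```python
import math


def confidence_of_difference(conv_a, n_a, conv_b, n_b):
    """Two-sided confidence (0 to 1) that the two conversion rates differ.

    Assumption: a pooled two-proportion z-test stands in for whatever
    your testing tool actually reports.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0
    z = abs(p_a - p_b) / se
    # Two-sided p-value is erfc(z / sqrt(2)); confidence is 1 minus that.
    return 1 - math.erfc(z / math.sqrt(2))


def ready_to_conclude(days_running, conv_a, n_a, conv_b, n_b,
                      min_days=7, min_confidence=0.95, min_conversions=100):
    """Return (ok, checks): ok is True only when all three guidelines pass."""
    checks = {
        "ran_long_enough": days_running >= min_days,
        # Assumption: the minimum applies per variation, not in total.
        "enough_conversions": min(conv_a, conv_b) >= min_conversions,
        "confident_enough": confidence_of_difference(
            conv_a, n_a, conv_b, n_b) >= min_confidence,
    }
    return all(checks.values()), checks


# Hypothetical numbers: day 2 of a test, 1,000 visitors per variation.
ok, checks = ready_to_conclude(2, conv_a=30, n_a=1000, conv_b=60, n_b=1000)
print(ok, checks)
```

With these made-up numbers the difference already looks statistically significant on day two, yet the test fails the duration and conversion checks. That's exactly the kind of early "winner" worth being suspicious of.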

However, as we mentioned, there's no "one size fits all" approach when it comes to defining a concluding point.

In a recent "unwebinar" with our friends at Unbounce, our marketing director, John Bonini, revealed that we typically run our tests for anywhere between 30 and 90 days, depending on the amount of traffic that we're driving to the variants. For example, if you're driving millions of people to your pages, the time between launch and conclusion will likely be far shorter than it would be for a website that only brings in a couple thousand views a day.
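If you want a rough feel for how traffic drives test length, here's a back-of-the-envelope sketch. It uses a common textbook approximation for the sample size needed to compare two conversion rates; the formula, the 80% power setting, and every number plugged in are my assumptions, not something from the unwebinar.

```python
import math
from statistics import NormalDist


def visitors_per_variation(baseline_rate, relative_lift,
                           alpha=0.05, power=0.80):
    """Approximate visitors needed per variation to detect the given lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p1 - p2) ** 2)


def estimated_days(daily_visitors, baseline_rate, relative_lift,
                   variations=2):
    """Days needed if daily traffic is split evenly across the variations."""
    needed = visitors_per_variation(baseline_rate, relative_lift) * variations
    return math.ceil(needed / daily_visitors)


# Hypothetical: 3% baseline conversion rate, hoping to detect a 20% lift.
for daily in (2_000, 1_000_000):
    print(daily, "visitors/day ->",
          estimated_days(daily, 0.03, 0.20), "day(s)")
```

Treat the result as a floor, not a finish line: a high-traffic site may hit its sample size in a day, but the seven-day minimum above still applies.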

While there are "statistical significance" calculators out there to help you determine whether or not it's time to call it quits, we urge you to proceed with caution.

I, too, was excited about the functionality of these tools when I first discovered them. However, after reading into it a bit, I found that sources were reporting that these tools often call tests too early, putting the legitimacy of your results at risk.
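One likely reason for those early calls is repeated checking: the more often you look, the more chances random noise has to cross the significance line. Here's a small simulation sketch of that effect. It runs hypothetical A/A tests (both versions are identical, so any "winner" is a false alarm) and compares stopping at the first significant peek against checking once at the end. The traffic numbers, peek count, and function names are all assumptions for illustration.

```python
import math
import random


def is_significant(conv_a, conv_b, n, z_crit=1.96):
    """Pooled two-proportion z-test at roughly 95%, equal group sizes n."""
    pooled = (conv_a + conv_b) / (2 * n)
    se = math.sqrt(pooled * (1 - pooled) * 2 / n)
    if se == 0:
        return False
    return abs(conv_a - conv_b) / n / se > z_crit


def simulate(experiments=400, peeks=20, batch=100, rate=0.05, seed=1):
    """Return (false-alarm rate when peeking, false-alarm rate at one final look)."""
    rng = random.Random(seed)
    alarms_peeking = 0
    alarms_final = 0
    for _experiment in range(experiments):
        conv_a = conv_b = n = 0
        any_peek = False
        significant = False
        for _peek in range(peeks):
            # Both variations convert at the same true rate.
            conv_a += sum(rng.random() < rate for _ in range(batch))
            conv_b += sum(rng.random() < rate for _ in range(batch))
            n += batch
            significant = is_significant(conv_a, conv_b, n)
            any_peek = any_peek or significant
        alarms_peeking += any_peek      # would have stopped at some peek
        alarms_final += significant     # significant at the last look only
    return alarms_peeking / experiments, alarms_final / experiments


peek_rate, final_rate = simulate()
print(f"False alarms when peeking: {peek_rate:.0%}")
print(f"False alarms with one final look: {final_rate:.0%}")
```

In a run like this, the peeking rate should come out well above the single-look rate, even though nothing about the pages is actually different.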
